Macroeconomy

Comrade Pan Gongsheng was appointed Party Secretary of the People’s Bank of China.

According to the central bank, on the afternoon of July 1, 2023, the People’s Bank of China held a meeting of leading cadres. Officials of the Organization Department of the Central Committee announced the central leadership’s decision: Comrade Pan Gongsheng was appointed Party Secretary of the People’s Bank of China; Comrade Guo Shuqing was removed from the post of Party Secretary, and Comrade Yi Gang from the post of Deputy Secretary of the Party Committee, of the People’s Bank of China.

Ministry of Finance: From January to May, national lottery sales totaled 225.171 billion yuan, a year-on-year increase of 50.0%.

According to Ministry of Finance data, national lottery sales in May totaled 50.021 billion yuan, a year-on-year increase of 17.255 billion yuan, or 52.7%. Of this, welfare lottery institutions sold 16.387 billion yuan, up 3.245 billion yuan, or 24.7%, and sports lottery institutions sold 33.634 billion yuan, up 14.010 billion yuan, or 71.4%. Sales grew rapidly compared with the same period last year, mainly because of the low base in that period, the pull of major sporting events, and supporting marketing for instant lottery tickets. From January to May, national lottery sales totaled 225.171 billion yuan, a year-on-year increase of 75.088 billion yuan, or 50.0%. Of this, welfare lottery institutions sold 71.781 billion yuan, up 9.998 billion yuan, or 16.2%, and sports lottery institutions sold 153.39 billion yuan, up 65.09 billion yuan, or 73.7%.

Two departments: a sound policy system and market mechanism for unconventional water use to be established by 2035.

According to the "China Water Conservancy" WeChat official account on July 1, the Ministry of Water Resources and the National Development and Reform Commission recently jointly issued the "Guiding Opinions on Strengthening the Allocation and Utilization of Unconventional Water Resources". Following the basic principles of scientific planning and unified allocation, target-based management and full utilization, adapting measures to local conditions with targeted policies, and combining policy incentives with market forces, the Guiding Opinions state that by 2025 the utilization of unconventional water sources in China will exceed 17 billion cubic meters, and the utilization rate of reclaimed water in water-deficient cities at prefecture level and above will exceed 25%. By 2035, a sound policy system and market mechanism for the utilization of unconventional water resources will be established, and a pattern of economical, efficient, systematic and safe utilization of unconventional water resources will basically take shape.

Jin Zhuanglong, Minister of Industry and Information Technology, visited the China SME Development Promotion Center on a research trip.

Jin Zhuanglong pointed out that General Secretary Xi Jinping attaches great importance to the development of small and medium-sized enterprises (SMEs), and has stressed the need to create a good environment for their development, increase support for them, and strengthen their confidence in development. The theme education campaign should be taken as an opportunity to thoroughly study and implement General Secretary Xi Jinping's important remarks on SME development, carry out in-depth research on the pressing and difficult problems SMEs face, measure work against the requirements of the CPC Central Committee, and strengthen inspection and rectification. It is necessary to adhere to the "two unwaverings", give equal weight to management and service, pursue development and assistance together, further improve the work system, the policy and regulation system, and a high-quality, efficient service system, continuously enhance the competitiveness of enterprises, and encourage the emergence of more "specialized and new" enterprises.

Ministry of Commerce spokesman answers questions on Dutch semiconductor export controls.

According to the website of the Ministry of Commerce, a ministry spokesman answered questions on the Netherlands' semiconductor export controls. China has noted the relevant reports. In recent months, China and the Netherlands have held frequent communication and consultation at multiple levels on semiconductor export controls. However, the Dutch side ultimately still placed the relevant semiconductor equipment under control, and China is dissatisfied with this. In recent years, in order to maintain its global hegemony, the United States has constantly overstretched the concept of national security, abused export control measures, and, even at the expense of its allies’ interests, coerced other countries into suppressing and containing China's semiconductor industry, artificially promoting industrial decoupling and broken supply chains and seriously damaging the development of the global semiconductor industry. China firmly opposes this.

China’s first natural gas trunk pipeline running directly to Xiong’an New Area was put into operation.

The first phase of the Mengxi pipeline project (Tianjin to Dingxing, Hebei), a key national natural gas infrastructure interconnection project, was put into operation on June 29th. It is the first natural gas trunk pipeline in China that runs directly to Xiong’an New Area. The Mengxi pipeline has a total length of 1,279 kilometers and passes through four provincial-level regions: Inner Mongolia, Shanxi, Hebei and Tianjin. It serves as the outbound channel for the coal-to-natural-gas projects in western Inner Mongolia and Datong, Shanxi, and also as the outbound channel for LNG landed at Tianjin. The project was approved as a whole and is being built in phases. The first phase, with a total length of 413.5 kilometers, starts at the Tianjin LNG Lingang Offtake Station and ends at the Baoding Dingxing Offtake Station in Hebei Province, with a maximum pipe diameter of 1,016 mm and a designed annual capacity of 6.6 billion cubic meters.

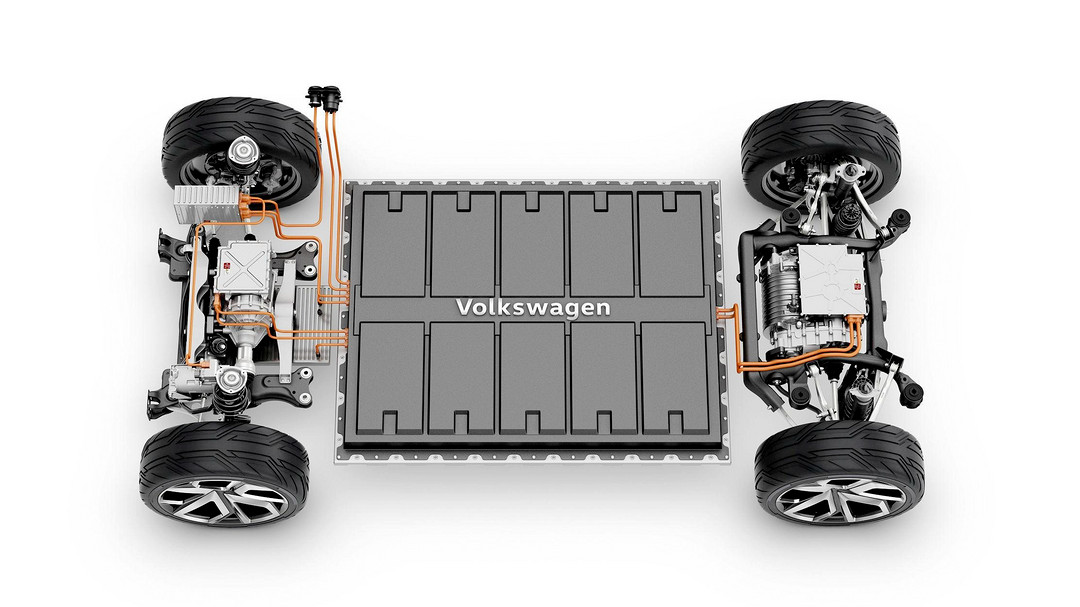

From July 1st, stage 6b of the national automobile emission standard takes effect.

Starting July 1st, stage 6b of the national automobile emission standard is fully implemented, and the production, import and sale of automobiles that do not meet it are prohibited.

Financial news

Cumulative net purchases by northbound funds in the first half of the year exceeded 180 billion yuan, with three factors attracting foreign capital inflows.

According to the Securities Daily, in the first half of this year the cumulative net inflow of northbound funds was 183.324 billion yuan, an increase of 155.33% over the same period last year (71.799 billion yuan). Institutions generally believe that China remains a hot spot for global investors and that the overall trend of economic recovery is clear. Foreign-funded institutions are optimistic about emerging market stocks and suggest moderately overweighting A shares. Foreign investors point to three positive factors behind their optimism about Chinese assets. First, China is stepping up efforts to attract and utilize foreign capital. Second, China’s economic recovery is improving, and foreign investors’ confidence in Chinese assets has increased. Third, the steady growth of listed companies’ earnings is an important factor in attracting foreign investment. Looking ahead to the second half of the year, the expected improvement in the economy should boost the confidence of overseas investors. The net inflow of northbound funds in 2023 is estimated at 200 billion to 400 billion yuan.

Up more than 25% month-on-month! Institutions bought heavily into this 100-billion-yuan market leader.

According to () iFinD data, the turnover of A-share block trades in June reached 68.106 billion yuan, an increase of more than 25% from the previous month. (), () and () were the top three stocks by cumulative block-trade turnover in June. It is worth noting that block trading in Midea Group has been active recently. On June 30th, Midea Group recorded a block trade of 257 million yuan. The reporter found that in June, Midea Group had 43 block trades, with a cumulative turnover exceeding 3.5 billion yuan, of which institutions bought more than 3 billion yuan.

CSRC: Encourage qualified public fund management companies to set up overseas subsidiaries according to law, on the premise of "seeing clearly and managing well".

According to the China Securities Journal, citing industry sources, the securities and fund institution supervision department of the China Securities Regulatory Commission (CSRC) said in the latest issue of the Bulletin on Institutional Supervision that in recent years the overseas subsidiaries of public fund management companies (hereinafter "overseas fund subsidiaries") have developed steadily as a whole and played an active role in providing asset allocation services to global customers, improving the international competitiveness of Chinese-funded institutions, and promoting the interconnection of capital markets. Their overall development has achieved certain results, but there is still room for improvement in brand effect, core competitiveness and the performance of their functions. In view of this, the CSRC will work on three fronts: supporting fund companies in setting up overseas subsidiaries according to law, encouraging overseas fund subsidiaries to build up their internal capabilities, and guiding overseas Chinese-funded institutions to strengthen cooperation, so as to promote the higher-quality development of overseas fund subsidiaries.

Industry news

Shanghai Data Exchange helps build a data circulation ecosystem across the upstream and downstream of the automobile industry chain.

According to the Shanghai Stock Exchange, in order to speed up the discovery of data application scenarios and stimulate trading in data products, on June 29th the Shanghai Data Exchange held a DSM series special supply-demand matchmaking meeting on building the automobile industry's data ecosystem. The meeting took place in Shanghai Jiading International Automobile City, the first innovation park in China focused on the automobile industry. The participating enterprises covered all parts of the automobile industry chain, including application service providers, vehicle manufacturers, intelligent solution providers and key parts suppliers, among them SAIC-GM, NIO, Li Auto, Shanghai Jinqiao Intelligent Networked Automobile, Shanghai New Energy Vehicle Public Data Collection and Monitoring Research Center, Shanghai Xinzhi, UFIDA and Tencent Technology. Demand-side enterprises included China Agricultural Bank, (), China Dadi Insurance, China Ping An Property & Casualty Insurance, and third-party business enterprises such as Lingyun Technology.

Sales of new carmakers continued to pick up in June, and Li Auto's deliveries this year have already exceeded last year's total.

On July 1st, China's new carmakers announced their delivery volumes for June. Li Auto's monthly deliveries continued to hold a large lead, while new carmakers such as NIO, XPeng and Leapmotor continued to recover. In June, Li Auto delivered a total of 32,575 new cars, exceeding 30,000 monthly deliveries for the first time, up 150.1% year-on-year. In the second quarter of this year, Li Auto's cumulative deliveries reached 86,533 vehicles, up 201.6% year-on-year. In the first half of 2023, Li Auto delivered 139,000 vehicles, more than its deliveries for all of 2022.

Chen Liquan, Academician of the Chinese Academy of Engineering: The energy storage industry is set for explosive growth.

Chen Liquan, an academician of the Chinese Academy of Engineering and a researcher at the Institute of Physics of the Chinese Academy of Sciences, said in Shenzhen on July 1 that under the guidance of the new national energy storage policy, China’s energy storage industry will enter a period of explosive growth. It is estimated that in 2025, wind and photovoltaic power generation together will account for about 18% of total annual electricity consumption, and the energy storage market will exceed 100 GWh. Yu Jing, deputy director and second-level inspector of the Shenzhen Development and Reform Commission, said that Shenzhen attaches great importance to the development of the energy storage industry and has successively issued documents such as "Several Measures for Shenzhen to Support the Accelerated Development of Electrochemical Energy Storage Industry" to accelerate the construction of a trillion-dollar world-class new energy storage industry center.

Company news

Tesla Model S/X will offer a discount of 35,000-45,000 yuan. Model S will start selling at 773,900 yuan.

Tesla announced on the 1st through its official website and social platforms that a purchase incentive for the Model S and Model X was officially launched. Users can receive benefits ranging from 35,000 to 45,000 yuan when purchasing either of the two models. After these benefits are included, the Model S dual-motor all-wheel drive version starts at 773,900 yuan and the Model S Plaid three-motor all-wheel drive version starts at 988,900 yuan; the Model X dual-motor all-wheel drive version now starts at 863,900 yuan and the Model X Plaid three-motor all-wheel drive version now starts at 1,013,900 yuan.

Li Auto delivered 32,575 vehicles in June 2023, exceeding 30,000 for the first time.

On July 1, 2023, Li Auto released its delivery data for June 2023. In June 2023, Li Auto delivered a total of 32,575 new cars, with monthly deliveries exceeding 30,000 for the first time, a year-on-year increase of 150.1%. In the second quarter of this year, Li Auto's cumulative deliveries reached 86,533 vehicles, up 201.6% year-on-year. Li Auto’s deliveries in the first half of 2023 have exceeded its deliveries for the whole of 2022.

AITO sets up a joint working group on marketing and service, as the cross-industry cooperation between Huawei and Seres deepens.

It is reported that on June 30, AITO issued an internal letter to all partners. The letter emphasizes that AITO has entered a new stage of moving "from 1 to N" after reaching the milestone of 100,000 production cars off the assembly line in 15 months. In order to support the deeper and closer development of the joint business between () Automobile and Huawei, the two sides decided to set up the "AITO Joint Working Group on Marketing and Service", which is responsible for the end-to-end closed-loop management of marketing, sales, delivery and service.

China Evergrande: Evergrande Real Estate has accumulated about RMB 277.688 billion in outstanding debts.

China Evergrande announced on the Hong Kong Stock Exchange that as of the end of May 2023, Evergrande Real Estate had accumulated about RMB 277.688 billion in unpaid debts due, and its accumulated overdue commercial bills totaled about RMB 245.418 billion. As of the same date, Evergrande Real Estate had 1,601 pending litigation cases each involving more than RMB 30 million, with a total amount in dispute of about RMB 382.94 billion.

Overseas news

U.S. regulators are considering limiting how much large banks can borrow from the Federal Home Loan Banks (FHLBs).

According to Cailian, US officials are considering restricting the ability of large banks to use the Federal Home Loan Banks (FHLBs) as a financial backstop, as part of a broader proposal for financial system reform. These changes are under comprehensive review and discussion, and could become the most influential reshaping of this $1.6 trillion system in decades. It is reported that regulators also discussed requiring banks that wish to borrow from the FHLBs to hold a certain proportion of mortgage assets. According to people familiar with the matter, the Federal Housing Finance Agency may adjust its plan before releasing its recommendations in the coming months. Limiting the borrowing capacity of large lenders may also require congressional action. The FHLBs have become a focus of controversy. Earlier this year, these institutions provided billions of dollars in loans to Silicon Valley Bank, Signature Bank and First Republic Bank.

Wells Fargo, State Street, Morgan Stanley and JPMorgan Chase have all raised their dividends after Wall Street banks passed the Federal Reserve's stress test.

Wells Fargo plans to raise its quarterly dividend from 30 cents to 35 cents per share. State Street intends to raise its dividend by 10% to 69 cents per share in the third quarter, and still intends to continue buying back shares as planned. Morgan Stanley raised its dividend to 85 cents per share and authorized the renewal of a $20 billion share repurchase program. JPMorgan Chase plans to raise its dividend to $1.05 per share in the third quarter. Bank of New York Mellon raised its quarterly dividend by 14% to 42 cents per share. Truist plans to keep its quarterly dividend unchanged at 52 cents per share. Earlier this week, the Federal Reserve announced that more than 20 large banks had all passed the annual stress test.

Musk said Twitter will limit the number of tweets users can read.

Musk said on July 1 local time that in order to prevent "extreme levels" of data scraping and system manipulation, Twitter is limiting the number of tweets that users can read: verified accounts are limited to 6,000 tweets a day, unverified accounts to 600 tweets a day, and new unverified accounts to 300 tweets a day. Musk subsequently raised the limits to 10,000, 1,000 and 500 tweets a day for the three types of accounts respectively.

Once again, the EU failed to reach an agreement on power market reform.

European Union countries again failed to reach an agreement on European electricity market reform on Friday, and diplomatic sources said that countries still differ over potential new state aid for power plants. The 27 EU countries are seeking a common position on the planned reform of the EU electricity market, but some countries, including Germany and France, have found it difficult to agree on elements such as the state aid rules for power plants, EU diplomats said. Some complained that they did not have enough time to analyze the document, which Sweden, the current holder of the rotating EU presidency, sent to countries on Thursday night. Responsibility for finalizing the agreement will now pass to Spain, which takes over the EU presidency from July until the end of the year. The planned reform aims to make electricity prices in Europe more stable and to avoid a repeat of last year's energy crisis, when soaring natural gas prices left consumers facing high bills. However, whether countries may subsidize existing power plants by having them sign new fixed-price electricity contracts with governments has caused great controversy.

Riots broke out in France for the fourth consecutive night; the Minister of the Interior said in an early-morning post that 270 people had been detained.

According to the Tass news agency, in the early morning of July 1 local time, French Interior Minister Darmanin wrote on Twitter that 270 people had been detained by French law enforcement authorities for participating in the riots on the evening of June 30. More than 80 of them were detained in Marseille, and a large number of law enforcement reinforcements were on their way to the city.

President of Russian National Aerospace Corporation: A new-generation GLONASS-K2 navigation satellite is scheduled to be launched in August.

Borisov, president of Russian National Aerospace Corporation, said in a report to Russian President Vladimir Putin on June 30th local time that Russia currently has an orbital constellation of 225 satellites of various types, and that the new-generation GLONASS-K2 navigation satellite is scheduled to be launched in August this year. The construction of the Russian space station will be completed by 2032, and its efficiency will far exceed that of the Russian segment of the current International Space Station. He also said that African countries such as Egypt and Algeria, as well as some Asian countries, hope to strengthen cooperation with Russia in the space field.

Nokia and Apple signed a long-term patent license agreement, the terms of which are confidential.

Nokia and Apple have signed a long-term patent license agreement, the terms of which are confidential. Nokia expects the agreement to begin generating revenue in January 2024.